NVIDIA's CUDA Best Practices Guide presents established parallelization and optimization techniques and explains coding metaphors and idioms that can greatly simplify programming for CUDA-capable GPU architectures.

Extract the cuDNN package file. New versions ship as a `tar.xz` package file: `tar -xf cudnn-linux-x86_64-…`. Older versions ship as a `.tgz` file: `tar -xzf cudnn-linux-x64-v….tgz`.
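If you would rather script the extraction step, Python's standard `tarfile` module can open both package formats. This is a minimal sketch, not NVIDIA tooling: `extract_cudnn_archive` is a hypothetical helper name, and it assumes you pass the path of the cuDNN archive you downloaded.

```python
from __future__ import annotations

import tarfile
from pathlib import Path


def extract_cudnn_archive(archive: str | Path, dest: str | Path) -> list[str]:
    """Extract a cuDNN archive (.tar.xz or .tgz) into dest and return its member names."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    # "r:*" lets tarfile detect the compression itself: xz for new .tar.xz
    # packages (like `tar -xf`), gzip for old .tgz packages (like `tar -xzf`).
    with tarfile.open(archive, mode="r:*") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Python 3.12+ and recent security releases: refuse members that
            # would be written outside dest, device files, and similar.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    return names
```

The same call handles both naming schemes, so a script does not need to branch on the file extension.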

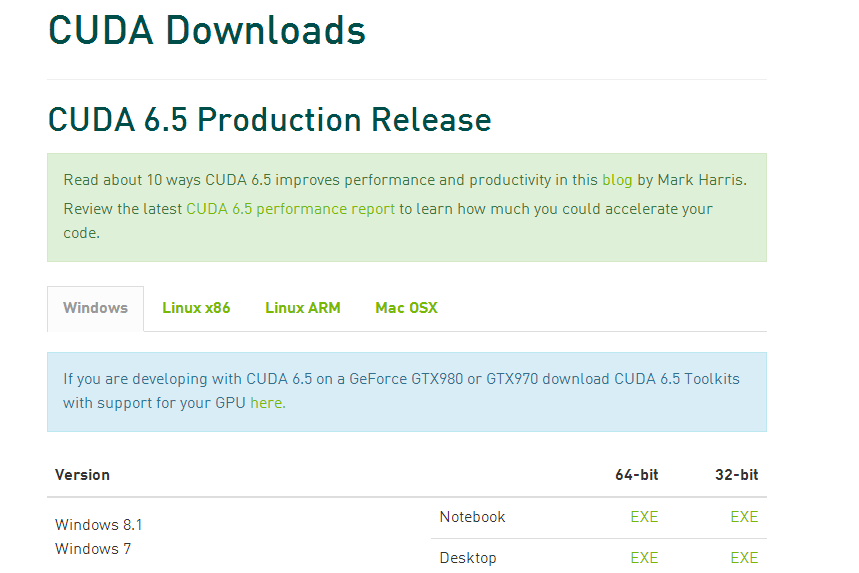

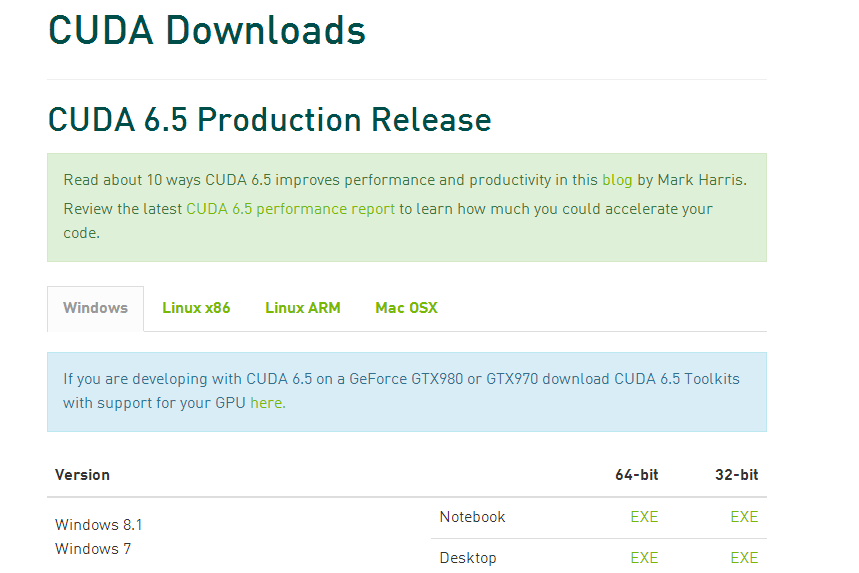

The Best Practices Guide is a manual to help developers obtain the best performance from NVIDIA CUDA GPUs.

Search for and download the installer file for the required CUDA version (`cuda_***_n`) from the NVIDIA website. If you're using a command-line interface, just copy the download link from the NVIDIA website and download the file with `wget`.
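On a machine without `wget`, the same download can be done with Python's standard library. This is a hedged sketch: `download_installer` is a hypothetical helper, and the URL you pass must be the download link copied from NVIDIA's site.

```python
from __future__ import annotations

import posixpath
import shutil
import urllib.request
from pathlib import Path
from urllib.parse import unquote, urlsplit


def download_installer(url: str, dest_dir: str | Path = ".") -> Path:
    """Download url into dest_dir, keeping the file name from the URL (like `wget URL`)."""
    # Use the last path segment of the URL as the local file name.
    name = unquote(posixpath.basename(urlsplit(url).path))
    if not name:
        raise ValueError(f"cannot derive a file name from {url!r}")
    dest = Path(dest_dir) / name
    dest.parent.mkdir(parents=True, exist_ok=True)
    # Stream the response to disk instead of holding a multi-GB installer in memory.
    with urllib.request.urlopen(url) as response, open(dest, "wb") as out:
        shutil.copyfileobj(response, out)
    return dest
```

Streaming with `shutil.copyfileobj` keeps memory use flat, which matters because CUDA installers are large.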

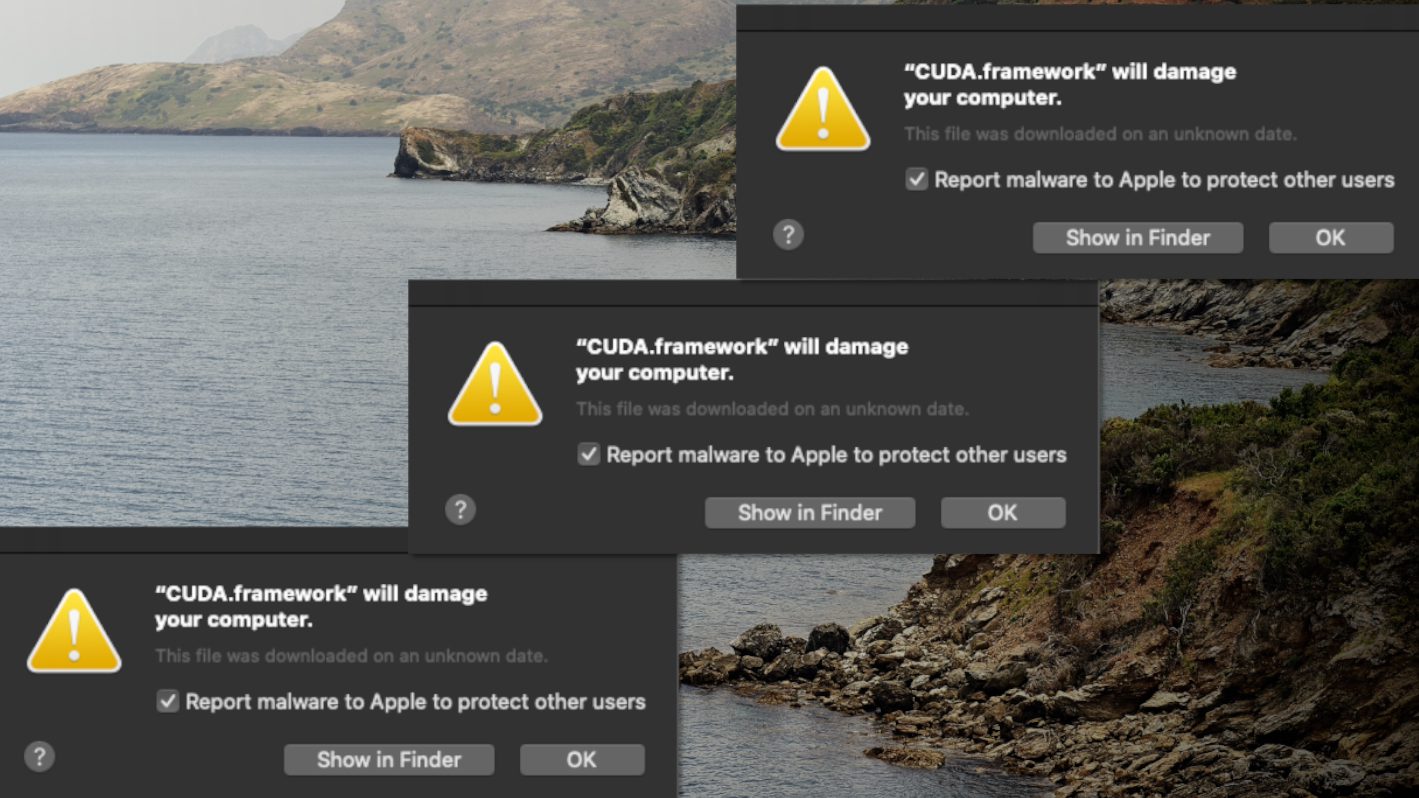

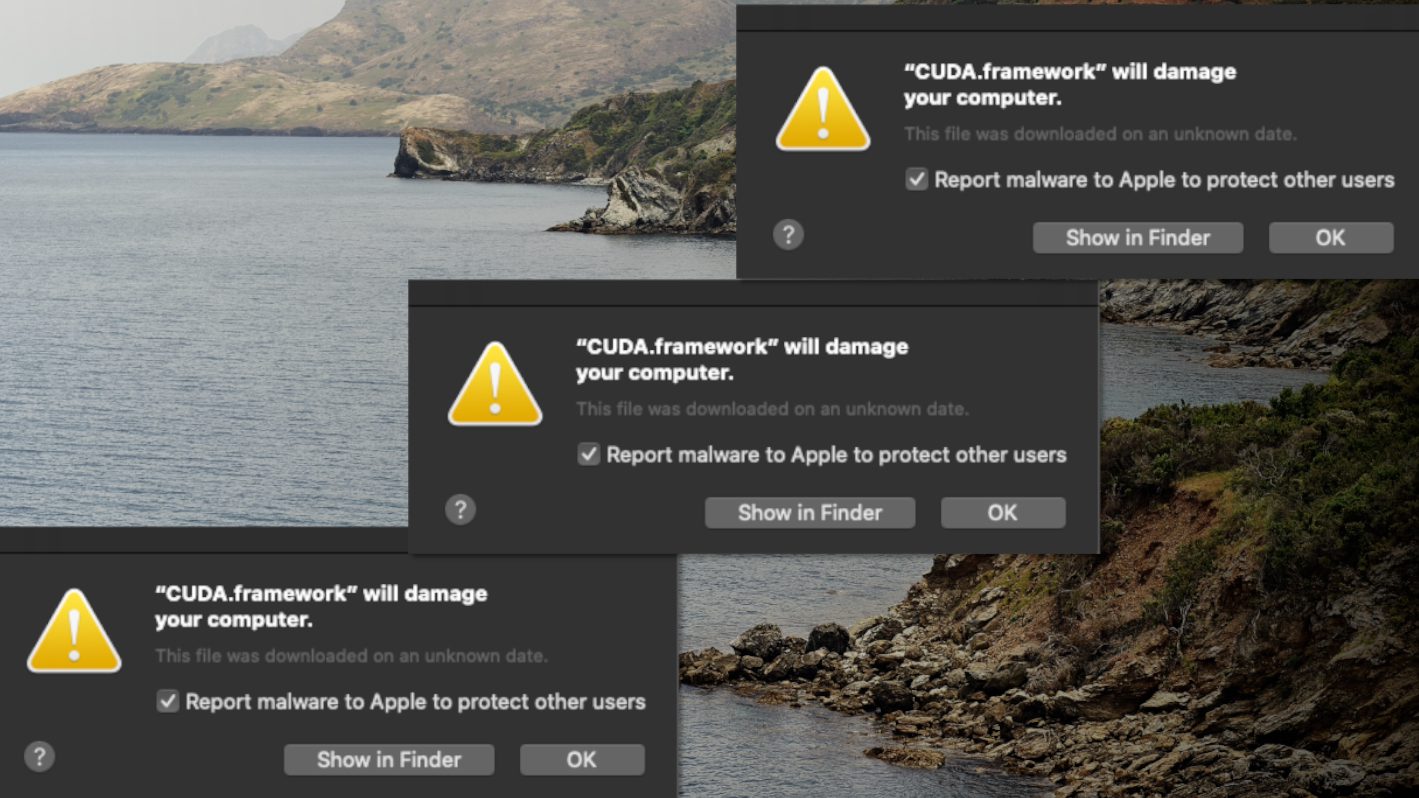

Moreover, if you want to use a different version of CUDA, never install CUDA from the `.deb` file! It will overwrite the current default CUDA directory (`/usr/local/cuda`), if you installed one already, and it will also replace the already installed, latest compatible NVIDIA driver with an old or incompatible one.
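Before and after installing a toolkit, it helps to see which versioned CUDA directories exist and where `/usr/local/cuda` currently points. The sketch below assumes the common layout of versioned directories named `cuda-X.Y` under `/usr/local` plus a `cuda` symbolic link; `cuda_installations` is a hypothetical helper, not part of any NVIDIA tool.

```python
from __future__ import annotations

import re
from pathlib import Path


def _version_key(name: str) -> tuple[int, ...]:
    # "cuda-11.8" -> (11, 8), so "cuda-9.0" sorts before "cuda-11.8"
    return tuple(int(part) for part in re.findall(r"\d+", name))


def cuda_installations(root: str | Path = "/usr/local") -> tuple[list[str], str | None]:
    """Return (versioned CUDA dirs under root, directory the `cuda` symlink resolves to)."""
    root = Path(root)
    versions = sorted(
        (p.name for p in root.glob("cuda-*") if p.is_dir()),
        key=_version_key,
    )
    link = root / "cuda"
    target = None
    if link.is_symlink():
        # resolve() follows the link (and any chained links) to the real directory
        target = link.resolve().name
    return versions, target


if __name__ == "__main__":
    installed, current = cuda_installations()
    print("installed toolkits:", installed)
    print("/usr/local/cuda ->", current)
```

Running it before an install and again afterwards shows whether the installer moved the default symlink.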

Use the `.run` file instead! It lets you avoid installing an incompatible NVIDIA driver and avoid overwriting the existing `/usr/local/cuda` symbolic link (if you installed another CUDA version already).
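A non-interactive runfile install that skips the bundled driver can be sketched as follows. This assumes the `--silent` and `--toolkit` installer options documented for recent CUDA runfiles (check `sh <runfile> --help` for your version). `runfile_toolkit_command` is a hypothetical helper that only builds the argument list, and the file name in the example is a placeholder.

```python
from __future__ import annotations

import shlex


def runfile_toolkit_command(runfile: str, use_sudo: bool = True) -> list[str]:
    """Build argv for a toolkit-only, non-interactive install from a CUDA .run file.

    --silent : run without the interactive menu
    --toolkit: install only the CUDA Toolkit, so the bundled NVIDIA driver is skipped
    """
    cmd = ["sh", runfile, "--silent", "--toolkit"]
    return ["sudo", *cmd] if use_sudo else cmd


if __name__ == "__main__":
    # Placeholder file name: substitute the runfile you actually downloaded.
    cmd = runfile_toolkit_command("./cuda_installer.run")
    print(shlex.join(cmd))  # review the command before running it
    # To execute it: subprocess.run(cmd, check=True)
```

Building the list separately from running it makes it easy to print and review the exact command before anything touches the system.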

Before installing, check compatibility:

- Check the CUDA versions compatible with the machine learning/deep learning frameworks and libraries you want to use.
- Check the compatibility of TensorRT versions with CUDA and cuDNN versions.
- Check which cuDNN versions support the compute capability of your GPU model(s).
- Check the compute capability of your GPU model(s).
- Check the system requirements for your CUDA version.
- Check the compatibility of your NVIDIA driver version with CUDA versions.
- Search for and check the latest stable NVIDIA driver version for your GPU model(s).

CUDA supports the SUSE Linux operating system distributions (both SUSE Linux Enterprise and openSUSE), and NVIDIA provides a repository with the necessary packages to easily install the CUDA Toolkit and NVIDIA drivers on SUSE. The NVIDIA CUDA Toolkit includes GPU-accelerated libraries, a compiler, development tools and the CUDA runtime.

To simplify installation of the NVIDIA CUDA Toolkit on SUSE Linux Enterprise for High Performance Computing (SLE HPC) 15, we have included a new SUSE module, NVIDIA Compute Module 15. This module adds the NVIDIA CUDA network repository to your SLE HPC system. It is available for all SLE HPC 15 Service Packs, and you can select it at installation time or activate it after installation. Note that the NVIDIA Compute Module 15 is currently only available for the SLE HPC 15 product.

To activate the module and install CUDA:

1. Start YaST and select System Extensions. After YaST checks the registration for the system, a list of installed and available modules is displayed.
2. Click the box to select NVIDIA Compute Module 15 X86-64. Notice that a URL for the EULA is included in the Details section.
3. Information on the EULA for the CUDA drivers is displayed. Please comply with the NVIDIA EULA terms.
4. Trust the GnuPG key for the CUDA repository. You will be given one more confirmation screen.
5. After the repository is added, you can install the CUDA drivers: start YaST, select Software Management, then search for `cuda`.
6. Select the `cuda` meta package and press Accept. A large number of packages will be installed.

You are now ready to start using the CUDA Toolkit to harness the power of NVIDIA GPUs. Managing heterogeneous computing environments has become increasingly important for HPC and AI/ML administrators, and the NVIDIA Compute Module is one way we are working to make these technologies easier to use.
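Two of the compatibility checks listed above, the driver version and the GPU compute capability, can be scripted. This sketch assumes `nvidia-smi` is on `PATH` and that your driver supports the `compute_cap` query field (recent drivers do). The minimum versions you compare against must come from NVIDIA's compatibility tables, and all helper names here are hypothetical.

```python
from __future__ import annotations

import shutil
import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    driver_version: str
    compute_cap: str


def parse_version(text: str) -> tuple[int, ...]:
    """'535.104.05' -> (535, 104, 5); '8.6' -> (8, 6)."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_minimum(found: str, required: str) -> bool:
    """True if version `found` >= `required`, compared numerically field by field."""
    return parse_version(found) >= parse_version(required)


def parse_nvidia_smi_csv(output: str) -> list[Gpu]:
    """Parse `nvidia-smi --query-gpu=name,driver_version,compute_cap --format=csv,noheader`."""
    gpus = []
    for line in output.splitlines():
        if not line.strip():
            continue
        # Split from the right so the first field (the GPU name) stays intact.
        name, driver, cap = (field.strip() for field in line.rsplit(",", 2))
        gpus.append(Gpu(name, driver, cap))
    return gpus


def query_gpus() -> list[Gpu]:
    """Run nvidia-smi if it is installed and return one Gpu per device."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version,compute_cap",
         "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_nvidia_smi_csv(out)
```

A typical use is `meets_minimum(gpu.driver_version, required)` for each `gpu` in `query_gpus()`, where `required` is the minimum driver version NVIDIA lists for your target CUDA release. Comparing integer tuples avoids the string-comparison trap where `"9.0" > "11.8"`.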

The High-Performance Computing industry is rapidly embracing AI and ML technology in addition to legacy parallel computing. Heterogeneous computing, the use of both CPUs and accelerators such as graphics processing units (GPUs), has become increasingly common, and NVIDIA GPUs are the most popular accelerators used today for AI/ML workloads. To get the full advantage of NVIDIA GPUs, you need to use CUDA, NVIDIA's general-purpose parallel computing platform and programming model. The CUDA Toolkit includes GPU-accelerated libraries, a compiler, development tools and the CUDA runtime.
